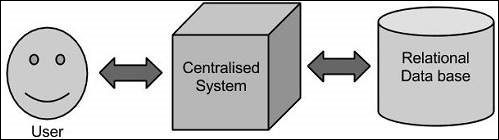

Traditional Approach

In this approach, an enterprise will have a computer to store and

process big data. Here data will be stored in an RDBMS like Oracle

Database, MS SQL Server or DB2 and sophisticated softwares can be

written to interact with the database, process the required data and

present it to the users for analysis purpose.

Limitation

This approach works well where we have less volume of data that can

be accommodated by standard database servers, or up to the limit of the

processor which is processing the data. But when it comes to dealing

with huge amounts of data, it is really a tedious task to process such

data through a traditional database server.

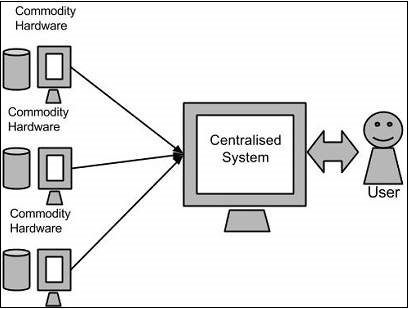

Google’s Solution

Google solved this problem using an algorithm called MapReduce. This

algorithm divides the task into small parts and assigns those parts to

many computers connected over the network, and collects the results to

form the final result dataset.

Above diagram shows various commodity hardwares which could be single CPU machines or servers with higher capacity.

No comments:

Post a Comment