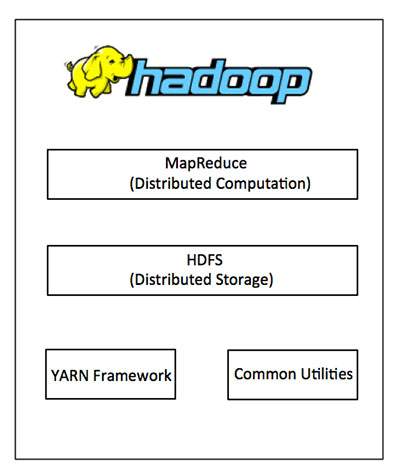

The Hadoop framework includes the following four modules:

- Hadoop Common: These are the Java libraries and utilities required by the other Hadoop modules. These libraries provide filesystem and OS-level abstractions and contain the Java files and scripts required to start Hadoop.

- Hadoop YARN: This is a framework for job scheduling and cluster resource management.

- Hadoop Distributed File System (HDFS™): A distributed file system that provides high-throughput access to application data.

- Hadoop MapReduce: This is a YARN-based system for parallel processing of large data sets.
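To give a feel for the processing model behind the MapReduce module listed above, here is a minimal word-count sketch in plain Python. It does not use Hadoop or YARN at all: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for illustration, and everything runs in a single process, whereas Hadoop spreads these phases across the nodes of a cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle and reduce in sequence (single process, illustration only)."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = [
        "Hadoop stores data in HDFS",
        "Hadoop runs MapReduce jobs",
    ]
    print(word_count(sample))
```

In a real cluster, each map call could run on a different node close to the data it reads, and the grouping step would move data over the network before the reducers run. The sketch only shows the shape of the computation.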

The accompanying diagram depicts these four components of the Hadoop framework.

Since 2012, the term "Hadoop" often refers not just to the base modules mentioned above but also to the collection of additional software packages that can be installed on top of or alongside Hadoop, such as Apache Pig, Apache Hive, Apache HBase, Apache Spark, etc.
